Here, we discuss load balancing: why we need it, the main types of load balancing algorithms, and how load balancing works. Let’s get started.

Before that, let’s go through the System Design topics we have covered so far:

Modern System Design – Abstraction

Modern System Design – Non-functional System Characteristics

Building Blocks for Modern System Design

Domain Name System and How it works?

Why we need load balancing:

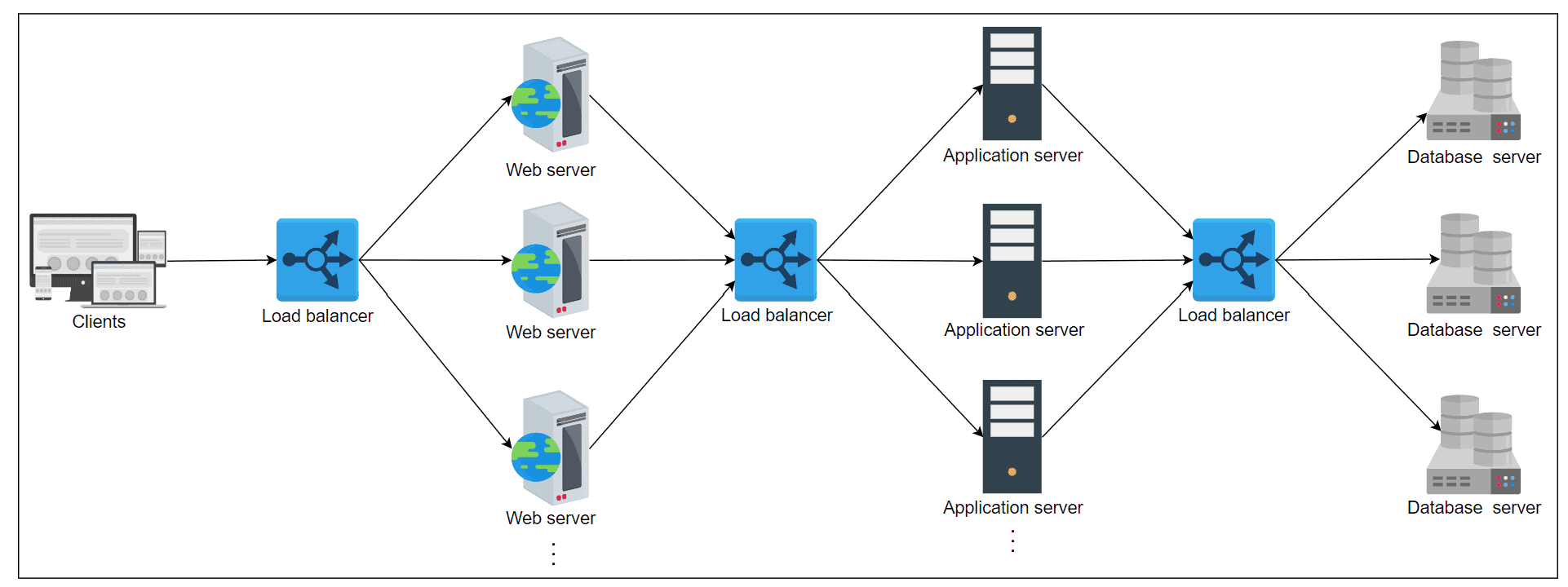

Millions of requests can arrive per second in a typical data center. To serve them, thousands (or even hundreds of thousands of) servers work together to share the load of incoming requests.

The load balancing layer is the first point of contact within a data center after the firewall. A load balancer may not be required if a service handles only a few hundred or even a few thousand requests per second.

However, as the number of client requests grows, load balancers provide the following capabilities:

- Scalability: By adding servers, the capacity of the application/service can be increased seamlessly. Load balancers make such upscaling or downscaling transparent to the end users.

- Availability: Even if some servers go down or suffer a fault, the system still remains available. One of the jobs of load balancers is to hide faults and failures of servers.

- Performance: Load balancers can forward requests to less-loaded servers so that users get quicker responses. This improves not only performance but also resource utilization.
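To make the scalability and availability points concrete, here is a minimal Python sketch of a server pool. It assumes a simple in-memory list of backends and a "down" flag that some external health checker sets; the class, its methods, and the server names are hypothetical, and real load balancers are far more involved.

```python
from itertools import count


class ServerPool:
    """Hypothetical in-memory pool that round-robins over healthy servers."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.down = set()        # servers an external health checker marked as failed
        self._counter = count()  # ever-increasing request counter

    def add_server(self, server):
        # Scaling out: the new server is eligible from the next request on.
        self.servers.append(server)

    def remove_server(self, server):
        # Scaling in: clients never learn which server went away.
        self.servers.remove(server)
        self.down.discard(server)

    def mark_down(self, server):
        self.down.add(server)

    def mark_up(self, server):
        self.down.discard(server)

    def pick(self):
        # Availability: route only to servers currently considered healthy.
        healthy = [s for s in self.servers if s not in self.down]
        if not healthy:
            raise RuntimeError("no healthy servers available")
        return healthy[next(self._counter) % len(healthy)]


pool = ServerPool(["app-1", "app-2", "app-3"])
pool.mark_down("app-2")                  # app-2 failed a health check
print([pool.pick() for _ in range(4)])   # only app-1 and app-3 receive requests
```

Because clients only ever go through `pick()`, servers can be added, removed, or taken out of rotation without the clients noticing.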

Load Balancing Algorithms:

Different load balancing algorithms provide different benefits; the choice of load balancing method depends on your needs:

- Round Robin — Requests are distributed across the group of servers sequentially.

- Least Connections — A new request is sent to the server with the fewest current connections to clients. The relative computing capacity of each server is factored into determining which one has the least connections.

- Least Time — Sends requests to the server selected by a formula that combines the fastest response time and fewest active connections. Exclusive to NGINX Plus.

- Hash — Distributes requests based on a key you define, such as the client IP address or the request URL. NGINX Plus can optionally apply a consistent hash to minimize redistribution of loads if the set of upstream servers changes.

- IP Hash — The IP address of the client is used to determine which server receives the request.

- Random with Two Choices — Picks two servers at random and sends the request to the one that is selected by then applying the Least Connections algorithm (or for NGINX Plus the Least Time algorithm, if so configured).
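As a rough illustration of two of the algorithms above, the following Python sketch implements Least Connections and Random with Two Choices over a dictionary of current connection counts. The function names and the optional `weights` parameter (a stand-in for each server's relative capacity) are assumptions for this example, not NGINX's implementation; Least Time is left out because it depends on measured response times.

```python
import random


def least_connections(active, weights=None):
    """Pick the server with the fewest active connections.

    `active` maps server -> current connection count. The optional `weights`
    map (hypothetical) scales counts by relative capacity, so a server with
    weight 2 is treated as half as busy as its raw count suggests.
    """
    weights = weights or {}
    return min(active, key=lambda s: active[s] / weights.get(s, 1))


def random_two_choices(active, rng=random):
    """Sample two distinct servers at random and keep the less loaded one."""
    a, b = rng.sample(list(active), 2)
    return a if active[a] <= active[b] else b


connections = {"app-1": 12, "app-2": 3, "app-3": 7}
print(least_connections(connections))                        # app-2
print(least_connections(connections, weights={"app-1": 5}))  # app-1 (12 / 5 = 2.4)
print(random_two_choices(connections, random.Random(42)))    # app-2 or app-3, never app-1
```

Random with Two Choices needs at least two servers. Comparing only two random candidates avoids scanning every server on each request, yet the busiest server is never chosen over a less loaded one.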

One of the most widely used techniques is consistent hashing.

Consistent Hashing

The hash function and modulo (%)

Each incoming request carries an identifier (e.g. the client IP address), and these identifiers are assumed to be uniformly random.

Using a hash function, we obtain an output value and then apply the modulo operation to get the number of the server to which the load balancer should direct the request.

- hash(ipAddress) → output

- output % number of servers → server ID

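It is important to use a good hash function so that the output values are spread evenly across their range. The modulo operation then guarantees that the server ID falls in the range 0 to (number of servers - 1). Plain modulo has one drawback that consistent hashing is designed to address: when servers are added or removed, most identifiers map to a different server.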
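The hash-and-modulo steps above can be sketched in Python as follows, with a minimal consistent-hash ring alongside for comparison. MD5 (via `hashlib`) is used here only as a stable, well-spread hash; the helper names, the `HashRing` class, its `vnodes` (virtual nodes per server) parameter, and the sample IP addresses are all hypothetical.

```python
import bisect
import hashlib


def stable_hash(key: str) -> int:
    # Python's built-in hash() is randomized per process for strings,
    # so use MD5 for a repeatable, evenly spread value (not for security).
    return int(hashlib.md5(key.encode()).hexdigest(), 16)


def modulo_server(ip: str, num_servers: int) -> int:
    # hash(ipAddress) % number of servers -> server ID in 0..num_servers-1
    return stable_hash(ip) % num_servers


class HashRing:
    """Minimal consistent-hash ring; each server owns `vnodes` points."""

    def __init__(self, servers, vnodes=100):
        self.vnodes = vnodes
        self._points = []  # sorted hash positions on the ring
        self._owner = {}   # hash position -> server that owns it
        for server in servers:
            self.add(server)

    def add(self, server):
        for i in range(self.vnodes):
            point = stable_hash(f"{server}#{i}")
            bisect.insort(self._points, point)
            self._owner[point] = server

    def lookup(self, key: str) -> str:
        # First point clockwise after the key's hash, wrapping past the end.
        idx = bisect.bisect(self._points, stable_hash(key)) % len(self._points)
        return self._owner[self._points[idx]]


# Count how many clients change servers when a fifth server is added.
ips = [f"10.0.{i // 256}.{i % 256}" for i in range(10_000)]
moved_mod = sum(modulo_server(ip, 4) != modulo_server(ip, 5) for ip in ips)

ring = HashRing(["s0", "s1", "s2", "s3"])
before = {ip: ring.lookup(ip) for ip in ips}
ring.add("s4")
moved_ring = sum(before[ip] != ring.lookup(ip) for ip in ips)

print(f"modulo: {moved_mod} moved, ring: {moved_ring} moved (of {len(ips)})")
```

With plain modulo, going from 4 to 5 servers should move roughly 80% of clients (a client stays put only when its hash leaves the same remainder for both counts), whereas the ring should move only around 20%, all of them onto the new server.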

Benefits of Load Balancing

- Reduced Downtime

- Scalability

- Redundancy

- Flexibility

- Efficiency

- Global Server Load Balancing

Frequently Asked Questions & Answers:

What if load balancers fail? Aren’t they a single point of failure (SPOF)?

If a single load balancer fails and there is nothing to fail over to, the whole service goes down. That is why load balancers are usually deployed in pairs as a means of disaster recovery. To maintain high availability, enterprises generally use clusters of load balancers that exchange heartbeat messages to continuously check each other’s health. If the primary load balancer fails, a backup takes over. If the entire cluster fails, traffic can still be rerouted manually as an emergency measure.
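The heartbeat-based failover described above can be sketched as an active/passive pair. This is a simplified, single-process illustration that assumes the backup periodically calls `check()` and that the primary's heartbeats arrive via `heartbeat()`; all names and the 3-second default timeout are hypothetical. In practice this is usually handled by dedicated tooling (for example, VRRP-based setups) rather than application code.

```python
import time


class FailoverPair:
    """Hypothetical active/passive load balancer pair driven by heartbeats."""

    def __init__(self, primary, backup, timeout=3.0, clock=time.monotonic):
        self.primary, self.backup = primary, backup
        self.active = primary
        self.timeout = timeout        # seconds of silence before failover
        self.clock = clock            # injectable for testing
        self.last_heartbeat = clock()

    def heartbeat(self):
        # Called whenever the primary's periodic "I'm alive" message arrives.
        self.last_heartbeat = self.clock()

    def check(self):
        # Run periodically on the backup; returns the node that should serve traffic.
        silent_for = self.clock() - self.last_heartbeat
        if self.active == self.primary and silent_for > self.timeout:
            self.active = self.backup  # failover: the backup takes over
        return self.active


now = [0.0]  # fake clock, in seconds
pair = FailoverPair("lb-a", "lb-b", clock=lambda: now[0])
now[0] = 2.0
pair.heartbeat()
now[0] = 4.0
print(pair.check())  # lb-a (last heartbeat 2 s ago)
now[0] = 6.0
print(pair.check())  # lb-b (4 s of silence > 3 s timeout)
```

Injecting the `clock` function makes the timeout logic testable without real waiting. This sketch never fails back automatically: once the backup is active, it stays active.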

Thank you for reading. We hope this gives you a good understanding. Explore our Technology News blogs for more news related to the Technology front. AdvanceDataScience.Com has the latest in what matters in technology daily. Also, you can find more articles on System design here.